Continuous Delivery

Continuous Delivery (CD; see the definition given by Martin Fowler) allows project leads and committers to configure automated processes that build and deploy their software. FINOS provides a dedicated OpenShift Online instance that can be used for this purpose.

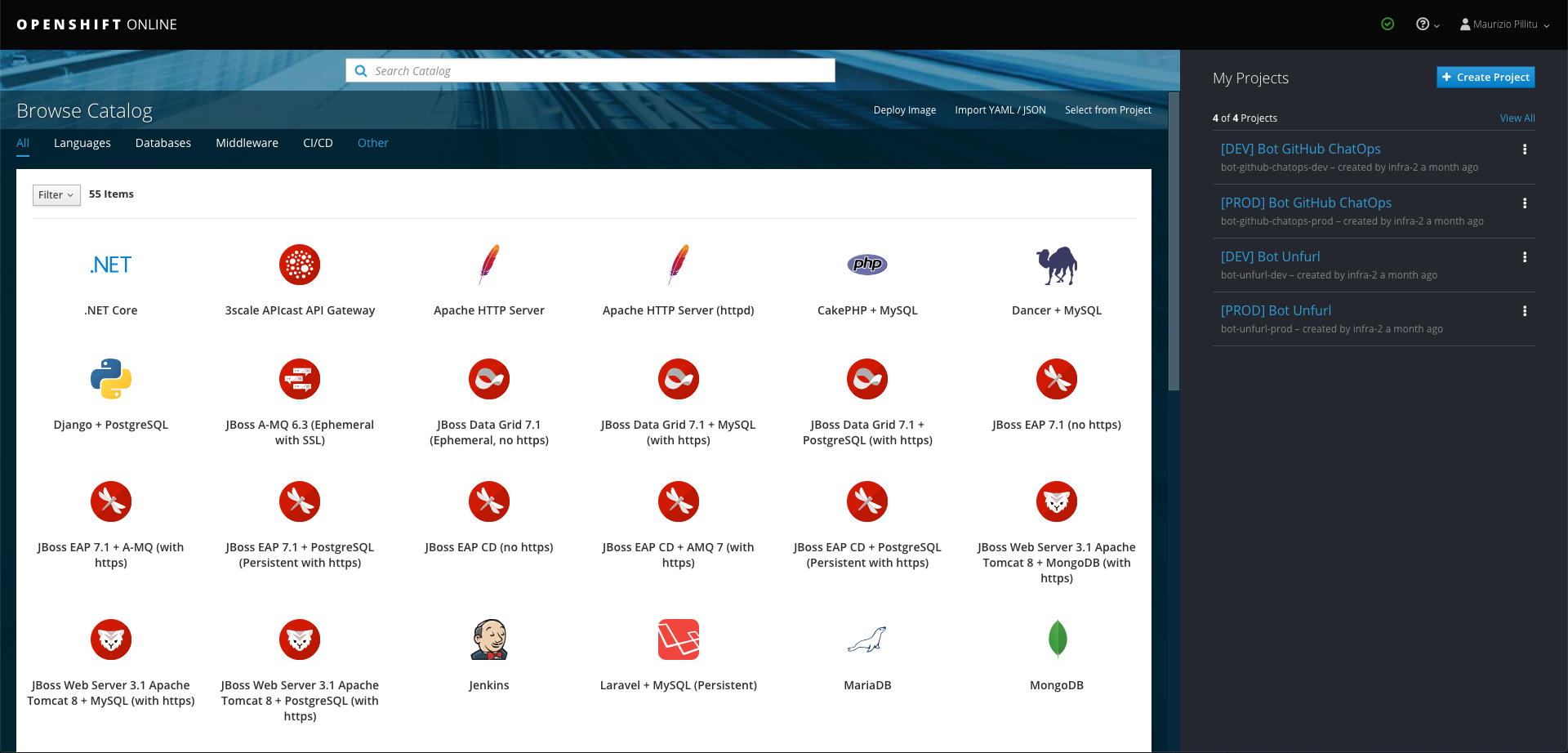

OpenShift Console

The Open Developer Platform provides access to a FINOS OpenShift Container Platform instance (also called FINOS OpenShift Console), the leading enterprise Kubernetes distribution, so that developers can try out integrations with other containers, build and test containerised architectures and enable continuously deployed code build pipelines.

Access is granted free of charge to all FINOS project teams (see below). This page guides contributors through requesting access, understanding the FINOS OpenShift setup and running their first tests.

How to get access

In order to access the FINOS OpenShift Console, it is necessary to comply with the following requirements:

- You must be part of a FINOS project team

- You must have an account on developers.redhat.com; you can also sign up with your GitHub credentials

To request access, send an email to help@finos.org, with the following info:

- Name, surname and company

- Corporate email address

- GitHub ID

- Red Hat Login ID (when logged into Red Hat Developers portal, go to the account page)

- FINOS Project team you are part of

The FINOS Infra team will create an OpenShift project for your team, grant permissions to your Red Hat user and email you the link to access the FINOS OpenShift Console.

Travis CI to OpenShift integration

Travis CI configuration can be extended to trigger the OpenShift image build and deployment. To make configuration easier, the Foundation provides a script called oc-deploy.sh that can be executed as an after_success script; it triggers a containerised deployment using the OpenShift CLI tool. With a single bash line, the following operations are performed:

- Installs the OpenShift CLI (oc) in the current environment (see OC_VERSION and OC_RELEASE)

- Logs into OpenShift Online (see OC_ENDPOINT) and selects the right OpenShift project (see OC_PROJECT)

- Deletes, if OC_DELETE_LABEL is defined, all OpenShift resources marked with the specified key=value label

- Processes the OC_TEMPLATE file, if it exists and is valid, and creates all OpenShift resources defined within; template parameter values can be passed using the OC_TEMPLATE_PROCESS_ARGS configuration variable

- Triggers the build of an OpenShift image, identified by the BOT_NAME configuration variable, uploading the application binary generated by the project build either as a ZIP archive (see OC_BINARY_ARCHIVE) or as a folder (see OC_BINARY_FOLDER); the folder is preferred over the archive, as it is less likely to cause network timeouts during the upload
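The sequence above can be sketched as a dry-run shell function. This is not the oc-deploy.sh source: the function name oc_deploy_plan is hypothetical, it leaves out the oc installation step, it reads the project from OC_PROJECT_NAME (the variable listed in the Configuration section), and it only prints the oc commands it would run, so it can be tried without a cluster.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run sketch of the oc-deploy.sh steps: it prints the oc
# commands instead of running them, and leaves out the oc installation step.
oc_deploy_plan() {
  # Defaults mirror the values documented in the Configuration section
  local endpoint="${OC_ENDPOINT:-https://api.pro-us-east-1.openshift.com}"
  local project="${OC_PROJECT_NAME:-ssf-dev}"
  local template="${OC_TEMPLATE:-.openshift-template.yaml}"

  # Log in (the token is masked) and select the project
  echo "oc login $endpoint --token=***"
  echo "oc project $project"

  # Delete every resource carrying the key=value label, if one is configured
  if [ -n "${OC_DELETE_LABEL:-}" ]; then
    echo "oc delete all -l $OC_DELETE_LABEL"
  fi

  # Process the template and create its resources, if the file exists
  if [ -f "$template" ]; then
    echo "oc process -f $template | oc create -f -"
  fi

  # Start the binary build; the folder is preferred over the ZIP archive
  if [ -n "${OC_BINARY_FOLDER:-}" ]; then
    echo "oc start-build $BOT_NAME --from-dir=$OC_BINARY_FOLDER"
  elif [ -n "${OC_BINARY_ARCHIVE:-}" ]; then
    echo "oc start-build $BOT_NAME --from-archive=$OC_BINARY_ARCHIVE"
  fi
}

# Usage: BOT_NAME=mybot OC_BINARY_FOLDER=vote-bot-service/target/oc oc_deploy_plan
```

Printing instead of executing makes it easy to check which branches (label deletion, template processing, folder or archive upload) a given environment would take.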

Below is the relevant part of the Travis CI configuration.

```yaml
...
after_success: curl -s https://raw.githubusercontent.com/symphonyoss/contrib-toolbox/master/scripts/oc-deploy.sh | bash
```

Configuration

The oc-deploy.sh script requires some environment variables to be defined before it is executed; the mandatory ones are:

- OC_TOKEN - the OpenShift Online token

- BOT_NAME - the name of the BuildConfig registered in OpenShift, which is used to start the OpenShift build

- OC_TEMPLATE - path of an OpenShift template to process (oc process) and create, if it exists; defaults to .openshift-template.yaml

- OC_BINARY_ARCHIVE or OC_BINARY_FOLDER - relative path to the ZIP file (or folder) to upload to the container as source

- Any other environment variable containing template parameters; see OC_TEMPLATE_PROCESS_ARGS below
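A missing mandatory variable is easier to diagnose when it is detected explicitly before the deployment starts. The sketch below is a hypothetical pre-flight check (check_oc_deploy_env is not part of oc-deploy.sh) that treats OC_TOKEN and BOT_NAME as required and accepts either OC_BINARY_FOLDER or OC_BINARY_ARCHIVE; OC_TEMPLATE is not checked because it has a default.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the mandatory oc-deploy.sh variables.
# Prints "ok" on success; otherwise lists what is missing on stderr and returns 1.
check_oc_deploy_env() {
  local missing=()
  local var
  for var in OC_TOKEN BOT_NAME; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var
    if [ -z "${!var:-}" ]; then
      missing+=("$var")
    fi
  done
  # One of the two binary sources must be provided
  if [ -z "${OC_BINARY_FOLDER:-}" ] && [ -z "${OC_BINARY_ARCHIVE:-}" ]; then
    missing+=("OC_BINARY_FOLDER|OC_BINARY_ARCHIVE")
  fi
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "missing: ${missing[*]}" >&2
    return 1
  fi
  echo "ok"
}
```

Running it as the first step of after_success (for example check_oc_deploy_env && curl ... | bash) would stop the job with a readable message instead of a failed oc login or build.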

Please review the default values of the following variables:

- OC_DELETE_LABEL - used to delete a group of resources with the same label; if set, the script invokes oc delete all -l <OC_DELETE_LABEL>; example: OC_DELETE_LABEL="app=mybot"

- OC_TEMPLATE_PROCESS_ARGS - comma-separated list of environment variables to pass to the OC template; empty by default; example: OC_TEMPLATE_PROCESS_ARGS="BOT_NAME,S2I_IMAGE"

- OC_VERSION - OpenShift CLI version; defaults to 1.5.1

- OC_RELEASE - OpenShift CLI release; defaults to 7b451fc-linux-64bit

- OC_ENDPOINT - OpenShift server endpoint; defaults to https://api.pro-us-east-1.openshift.com, no changes needed

- OC_PROJECT_NAME - the OpenShift Online project to use; defaults to ssf-dev, no changes needed

- SKIP_OC_INSTALL - skips the OpenShift CLI (oc) installation; defaults to false; useful for local oc-deploy.sh test runs
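When a deployment behaves unexpectedly, it can help to log the configuration that is actually in effect. The hypothetical helper below (print_oc_deploy_config is not part of oc-deploy.sh) resolves each optional variable with bash's ${VAR:-default} expansion, using the defaults listed above; note that this form also falls back to the default when a variable is set but empty.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the effective value of each optional variable,
# falling back to the documented defaults. ${VAR:-default} also falls back
# when VAR is set but empty. OC_TOKEN is deliberately never printed.
print_oc_deploy_config() {
  echo "OC_VERSION=${OC_VERSION:-1.5.1}"
  echo "OC_RELEASE=${OC_RELEASE:-7b451fc-linux-64bit}"
  echo "OC_ENDPOINT=${OC_ENDPOINT:-https://api.pro-us-east-1.openshift.com}"
  echo "OC_PROJECT_NAME=${OC_PROJECT_NAME:-ssf-dev}"
  echo "OC_TEMPLATE=${OC_TEMPLATE:-.openshift-template.yaml}"
  echo "OC_DELETE_LABEL=${OC_DELETE_LABEL:-}"
  echo "OC_TEMPLATE_PROCESS_ARGS=${OC_TEMPLATE_PROCESS_ARGS:-}"
  echo "SKIP_OC_INSTALL=${SKIP_OC_INSTALL:-false}"
}
```

Because CI logs are often public, the helper intentionally leaves out OC_TOKEN.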

The example below shows a sample Travis CI configuration.

```yaml
env:
  global:
    - BOT_NAME="mybot"
    - OC_DELETE_LABEL="app=mybot"
    - SYMPHONY_POD_HOST="foundation-dev.symphony.com"
    - SYMPHONY_API_HOST="foundation-dev-api.symphony.com"
    - OC_BINARY_FOLDER="vote-bot-service/target/oc"
    - OC_TEMPLATE_PROCESS_ARGS="BOT_NAME,SYMPHONY_POD_HOST,SYMPHONY_API_HOST"

after_success: curl -s https://raw.githubusercontent.com/symphonyoss/contrib-toolbox/master/scripts/oc-deploy.sh | bash
```
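OC_TEMPLATE_PROCESS_ARGS holds variable names, not values, so each name has to be resolved to its current value before being handed to oc process. The sketch below shows one way to do that; build_process_params is a hypothetical name, and the exact mechanism used inside oc-deploy.sh is an assumption here. It emits one argument per line (-p, then NAME=VALUE) so the result can be loaded into a bash array; names must be separated by commas without spaces.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: expand a comma-separated list of variable names (as in
# OC_TEMPLATE_PROCESS_ARGS) into "oc process" parameter flags, one per line.
build_process_params() {
  local -a names=()
  local name
  IFS=',' read -r -a names <<< "${1:-}"
  for name in "${names[@]}"; do
    # ${!name} is the value of the environment variable whose name is $name
    printf '%s\n' -p "${name}=${!name:-}"
  done
}

# Usage (requires oc and a logged-in session):
#   mapfile -t params < <(build_process_params "$OC_TEMPLATE_PROCESS_ARGS")
#   oc process -f .openshift-template.yaml "${params[@]}" | oc create -f -
```

With the configuration above, this would pass BOT_NAME, SYMPHONY_POD_HOST and SYMPHONY_API_HOST to the template as -p BOT_NAME=mybot and so on.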

Project setup

In order to configure Continuous Delivery, the project must meet a few requirements and define some configuration:

- Memory (size) and CPU (number) requirements must be known upfront

- The deployment strategy must be known upfront; the default is Rolling, which spins up a new container in parallel to the existing one, switches traffic when the new one is ready and finally kills the existing one.

- Collect all passwords and secrets that the application needs to run; the Foundation Staff will register these entries as secrets in OpenShift and deliver the secret key references

- The build process MUST generate a folder that:
  a. MUST contain all the artifacts needed to run the application; for Maven builds, the assembly plugin can be used
  b. MUST contain a (Unix) run script; for Maven builds, the appassembler plugin can generate it
  c. MUST NOT contain any password, secret or sensitive data (such as emails, names or addresses) in clear text; OpenShift secrets provide a safe way to manage them

- Follow the instructions below to define an OpenShift template called .openshift-template.yaml in the root folder of the GitHub repository
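Some of the build-folder requirements above can be checked in CI before anything is uploaded. The function below is a hypothetical sketch (check_build_folder and the run script path passed to it are illustrative, not part of the FINOS tooling): it verifies that the folder exists and contains an executable run script, and applies a crude text heuristic for clear-text credentials. It is not a substitute for a real secret scanner.

```bash
#!/usr/bin/env bash
# Hypothetical pre-deploy check of the build output folder: it must exist,
# contain an executable run script, and show no obvious clear-text secrets.
check_build_folder() {
  local dir="$1" run_script="$2"
  if [ ! -d "$dir" ]; then
    echo "missing folder: $dir" >&2
    return 1
  fi
  if [ ! -x "$dir/$run_script" ]; then
    echo "missing or non-executable run script: $dir/$run_script" >&2
    return 1
  fi
  # Crude heuristic, not a secret scanner: flag text lines such as
  # "password=...", skipping binary files (-I)
  if grep -rIEiq '(password|secret|token)[[:space:]]*[=:][[:space:]]*[^[:space:]]+' "$dir"; then
    echo "possible clear-text secret in $dir" >&2
    return 1
  fi
  echo "ok"
}
```

For the sample configuration above, a call could look like check_build_folder vote-bot-service/target/oc bin/run, where bin/run stands for whatever script name the appassembler configuration produces.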

Template definition

The following sections describe, one by one, the parts of an OpenShift template that defines:

- The Docker image build process for the given app

- The image stream that triggers a deployment configuration when an image is created

- The deployment configuration that defines, among other things, the containers to run and their configuration

You will notice that all resources configured below define an app label with the same value, which makes it possible to run command-line operations across all resources with that label, for example oc delete all -l app=mybot

Template name and parameters

This is the header of the file, which defines the template name (mybot-template) and a list of parameters, such as BOT_NAME; parameter values must be exported as environment variables before invoking oc-deploy.sh (see above). The OpenShift configuration resources are defined after the objects: line.

```yaml
apiVersion: v1
kind: Template
metadata:
  name: mybot-template
parameters:
  - name: BOT_NAME
    description: The Bot name
    displayName: Bot Name
    required: true
    value: "mybot"
  - name: SEND_EMAIL
    description: Whether the bot should send emails or not; defaults to true
    displayName: Send Email?
    required: true
    value: "true"
  ...
objects:
```
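A ${NAME} placeholder that is used in the objects but never declared under parameters is a common template mistake. The hypothetical lint below (undeclared_template_params is an illustrative name) lists such placeholders; it assumes the layout shown above, with parameters: and objects: as top-level keys and each parameter introduced by a "- name:" line.

```bash
#!/usr/bin/env bash
# Hypothetical lint for an OpenShift template: prints every ${NAME}
# placeholder that is used in the file but not declared as "- name: NAME"
# between the top-level "parameters:" and "objects:" keys.
undeclared_template_params() {
  local file="$1"
  LC_ALL=C comm -23 \
    <(grep -oE '\$\{[A-Za-z0-9_]+\}' "$file" | tr -d '${}' | LC_ALL=C sort -u) \
    <(awk '/^parameters:/ { in_params = 1; next }
           /^objects:/    { in_params = 0 }
           in_params && /^[[:space:]]*-[[:space:]]*name:/ { print $NF }' "$file" \
        | LC_ALL=C sort -u)
}

# Usage: undeclared_template_params .openshift-template.yaml
# (no output means every placeholder is declared)
```

Both lists are sorted in the C locale so that comm can compare them reliably.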

Images and streams

The OpenShift image creation process is carried out by a container called deployer and described by the BuildConfig resource, which takes as parameters:

- a sourceStrategy, which identifies the container image used to build the deployer container and points to the ImageStream named s2i-java

- the output image name and tag, which points to the ImageStream named ${BOT_NAME}

See the example below.

```yaml
- apiVersion: v1
  kind: ImageStream
  metadata:
    labels:
      app: ${BOT_NAME}
    name: s2i-java
  spec:
    dockerImageRepository: "docker.io/jorgemoralespou/s2i-java"
- apiVersion: v1
  kind: ImageStream
  metadata:
    labels:
      app: ${BOT_NAME}
    name: ${BOT_NAME}
  spec: {}
  status:
    dockerImageRepository: ""
- apiVersion: v1
  kind: BuildConfig
  metadata:
    name: ${BOT_NAME}
    labels:
      app: ${BOT_NAME}
  spec:
    output:
      to:
        kind: ImageStreamTag
        name: ${BOT_NAME}:latest
    postCommit: {}
    resources: {}
    runPolicy: Serial
    source:
      type: Binary
      binary:
    strategy:
      type: Source
      sourceStrategy:
        from:
          kind: ImageStreamTag
          name: s2i-java:latest
    triggers: {}
```

Deployment configuration

The DeploymentConfig resource defines:

- The deployment strategy; defaults to Rolling

- The container configuration:
  a. The image used to create the container; this must match the ImageStream output defined above
  b. The TCP/UDP ports to expose; in this case port 8080 is open at container level
  c. The readinessProbe, which checks (here with an HTTP GET on /healthcheck) whether the container is ready to receive traffic
  d. The container environment variables, defined in clear text (e.g. LOG4J_FILE) or loaded from a secret key reference; secrets are managed by the Foundation Staff and are normally used to hold credentials for external APIs

- The deployment configuration trigger, pointing to the latest tag of an image called ${BOT_NAME}

See the example below.

```yaml
- apiVersion: v1
  kind: DeploymentConfig
  metadata:
    labels:
      app: ${BOT_NAME}
    name: ${BOT_NAME}
  spec:
    replicas: 1
    selector:
      app: ${BOT_NAME}
      deploymentconfig: ${BOT_NAME}
    strategy:
      rollingParams:
        intervalSeconds: 1
        maxSurge: 25%
        maxUnavailable: 25%
        timeoutSeconds: 600
        updatePeriodSeconds: 1
      type: Rolling
    template:
      metadata:
        labels:
          app: ${BOT_NAME}
          deploymentconfig: ${BOT_NAME}
      spec:
        containers:
          - image: ${BOT_NAME}:latest
            imagePullPolicy: Always
            name: ${BOT_NAME}
            ports:
              - containerPort: 8080
                protocol: TCP
            readinessProbe:
              httpGet:
                path: "/healthcheck"
                port: 8080
              initialDelaySeconds: 15
              timeoutSeconds: 1
            env:
              - name: LOG4J_FILE
                value: "/opt/openshift/log4j.properties"
              - name: TRUSTSTORE_PASSWORD
                valueFrom:
                  secretKeyRef:
                    name: ${BOT_NAME}.certs
                    key: truststore.password
              ...
            ...
        ...
    triggers:
      - type: ConfigChange
      - imageChangeParams:
          automatic: true
          containerNames:
            - ${BOT_NAME}
          from:
            kind: ImageStreamTag
            name: ${BOT_NAME}:latest
        type: ImageChange
  status: {}
```
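The percentages in rollingParams are resolved against the replica count: maxSurge is rounded up and maxUnavailable is rounded down, and if both end up at zero, Kubernetes falls back to allowing one unavailable pod so the rollout can progress. The small function below (rolling_fenceposts is a hypothetical name) reproduces that arithmetic as a sketch. With replicas: 1 and 25% for both values, it yields one extra pod and zero unavailable pods, which is why the new container is started and must pass its readinessProbe before the old one is removed.

```bash
#!/usr/bin/env bash
# Sketch of how percentage-based maxSurge/maxUnavailable resolve to pod
# counts: surge rounds up, unavailable rounds down.
rolling_fenceposts() {
  local replicas="$1" surge_pct="$2" unavail_pct="$3"
  local surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceiling
  local unavailable=$(( replicas * unavail_pct / 100 ))  # floor
  # Both zero would block the rollout, so one unavailable pod is allowed
  if [ "$surge" -eq 0 ] && [ "$unavailable" -eq 0 ]; then
    unavailable=1
  fi
  echo "maxSurge=$surge maxUnavailable=$unavailable"
}

# Usage: rolling_fenceposts 1 25 25   (the values used in the DeploymentConfig above)
```

Increasing replicas changes the picture: with 10 replicas the same percentages allow 3 extra pods and 2 unavailable pods during a rollout.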

Service definition

In order to access the container port, it is necessary to define:

- a Service that acts as a load balancer across all containers with the app=${BOT_NAME} label

- a Route that exposes the Service under a hostname registered with the OpenShift router

See the example below.

```yaml
- apiVersion: v1
  kind: Service
  metadata:
    annotations:
      openshift.io/generated-by: OpenShiftNewApp
    labels:
      app: ${BOT_NAME}
    name: ${BOT_NAME}
  spec:
    ports:
      - name: healthcheck-tcp
        port: 8080
        protocol: TCP
        targetPort: 8080
    selector:
      app: ${BOT_NAME}
      deploymentconfig: ${BOT_NAME}
    sessionAffinity: None
    type: ClusterIP
  status:
    loadBalancer: {}
- apiVersion: v1
  kind: Route
  metadata:
    name: ${BOT_NAME}
    labels:
      app: ${BOT_NAME}
  spec:
    to:
      kind: Service
      name: ${BOT_NAME}
      weight: 100
    port:
      targetPort: healthcheck-tcp
    wildcardPolicy: None
```
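The Route above does not set spec.host, so OpenShift generates a hostname for it, typically of the form <route-name>-<project>.<router subdomain>. The router subdomain is cluster-specific: apps.example.com in the usage line below is a placeholder, and oc get route <name> -o jsonpath='{.spec.host}' returns the hostname actually assigned. The hypothetical helper below only predicts the /healthcheck URL from those three parts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: predicts the URL of the /healthcheck endpoint exposed
# through a Route that has no explicit spec.host. The router subdomain is
# cluster-specific; pass the real one for your cluster.
route_healthcheck_url() {
  local route_name="$1" project="$2" router_subdomain="$3"
  # Typical generated host: <route-name>-<project>.<router-subdomain>
  echo "http://${route_name}-${project}.${router_subdomain}/healthcheck"
}

# Usage: curl -fsS "$(route_healthcheck_url mybot ssf-dev apps.example.com)"
```

Plain http is used because the Route defines no TLS termination.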

Useful commands

Below are some commands we found useful while setting up our Symphony bots on OpenShift Online, using the OpenShift CLI (oc).

```bash
# Log in to FINOS OpenShift; copy the token from https://console.pro-us-east-1.openshift.com/console/command-line
export OC_TOKEN=...
oc login https://api.pro-us-east-1.openshift.com --token="$OC_TOKEN"

# List projects
oc projects

# Select a specific project; all subsequent commands refer to that project
oc project my-bot

# Show services, builds, pods and deployments for the current project
oc status

# Import an image from Docker Hub
oc import-image maoo/s2i-java-binary --all --confirm

# Process an OpenShift template, passing parameter values, and create the resulting resources
oc process -f bot_template.yaml -p BOT_NAME=my-botname | oc create -f -

# Start an OpenShift build, uploading an archive or a folder
oc start-build my-botname --from-archive=./target/archive.zip --wait=true
oc start-build my-botname --from-dir=./target/my-botname --wait=true

# Delete all resources whose 'app' label equals 'my-botname'
oc delete all -l app=my-botname
```
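Since BOT_NAME is reused as the name of every resource in the template, including the Service, it has to satisfy the strictest naming rule among them: Kubernetes Service names must be DNS-1035 labels (lowercase letters, digits and '-', starting with a letter, ending with a letter or digit, at most 63 characters), so names containing underscores or uppercase letters are rejected. The hypothetical check below (is_valid_bot_name is an illustrative name) can be run before passing a value with oc process -p BOT_NAME=...

```bash
#!/usr/bin/env bash
# Hypothetical check: does the value satisfy the DNS-1035 label rule that
# Kubernetes applies to Service names?
is_valid_bot_name() {
  local name="$1"
  local re='^[a-z]([-a-z0-9]*[a-z0-9])?$'
  [ "${#name}" -le 63 ] && [[ "$name" =~ $re ]]
}

# Usage: is_valid_bot_name "$BOT_NAME" || echo "invalid BOT_NAME: $BOT_NAME" >&2
```

Storing the regular expression in a variable avoids bash quoting pitfalls with parentheses inside [[ =~ ]].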